This is how AI will speed up peer reviews

Mention AI to some people, and they may think of sentient, all-knowing computers intent on destroying human life. Mention to researchers who have submitted a manuscript to a journal that AI may help evaluate their submission, and you’ll probably be subjected to a similar level of recoil: “That can’t be right”, “a bot is going to reject my manuscript”, “I’ll never submit to this journal again”.

One of my favorite maxims about AI is that it doesn’t stand for artificial intelligence, it stands for augmented intelligence. It augments human decision making – it doesn’t replace it. There are some myths to dispel about AI in scholarly communication, and how it is used in manuscript evaluation in particular. As we discuss trust in this year’s Peer Review Week, I hope to be able to show how AI is being used to aid human decision making, and how this, in turn, benefits both the author and reviewer, while speeding up the whole peer review process.

An eye-catching statistic is the scale of output of researchers in recent years. Over 3 million papers were published in over 33,000 journals in 2018. Now multiply each of those accepted papers by 2, and you get an idea of the number of peer reviews required to support this output. Multiply the accepted papers by 30-50%, and you get an idea of the overall number of submissions to journals.

In addition, all of these numbers are growing by 6-8% year-on-year, so you can appreciate the problems journals face in finding reviewers, keeping their publication times reasonable, and identifying articles that are not suitable for their journal. Let’s follow a typical manuscript from the point of submission through to a final decision, and how AI can help make this process smoother, quicker, and – well – even enjoyable!

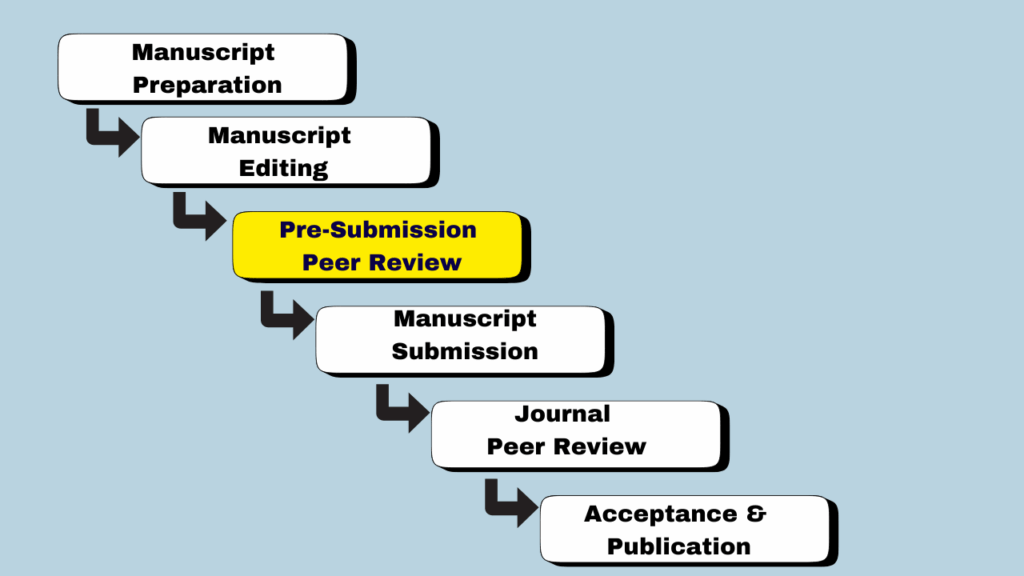

Submission

When an article is submitted to a journal, the first thing that happens is that someone in the editorial office screens the manuscript to determine whether it is suitable for the journal. This usually involves evaluating content match with the journal's aims and scope, the structure of the article (and article type), and whether the article contains large chunks of plagiarised text. And while this can happen in a few minutes, if you multiply this by the thousands of submissions that larger journals receive, you can see that it takes significant time overall.

But time is not the only concern; transparency and consistency are also problematic. Individuals can come to different conclusions about the same paper for different reasons.

This is where some AI solutions can help. When a manuscript is submitted to a journal, it can be screened for content match in a smart, semantic way. Tools exist that extract the concepts described in the manuscript and match them to the concepts the journal publishes, allowing the in-house editors to quickly determine if there is a content match between the journal and the incoming paper.
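As a rough illustration of the matching idea, here is a minimal sketch that scores a manuscript against a journal's scope statement. It swaps in plain word-overlap (cosine similarity over term counts) for real concept extraction, and the stop-word list, function names, and sample texts are all assumptions made for the example, not how any particular screening tool works.

```python
import math
import re
from collections import Counter

# Hypothetical stop-word list; a real tool would rely on a concept vocabulary instead.
STOP_WORDS = {"a", "an", "and", "the", "of", "in", "for", "to", "on", "with", "we", "is", "are"}


def term_counts(text: str) -> Counter:
    """Lower-case the text, split it into words, and count the non-stop-word terms."""
    words = re.findall(r"[a-z]+", text.lower())
    return Counter(w for w in words if w not in STOP_WORDS)


def scope_match(manuscript: str, journal_scope: str) -> float:
    """Cosine similarity of term counts: 0.0 means no shared terms, 1.0 an identical mix."""
    a, b = term_counts(manuscript), term_counts(journal_scope)
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


# Made-up sample texts for illustration only.
scope = "Research on protein folding, enzyme kinetics and structural biology"
on_topic = "We study enzyme kinetics during protein folding"
off_topic = "A survey of medieval trade routes"

print(round(scope_match(on_topic, scope), 2))
print(scope_match(off_topic, scope))
```

The score is only a signal for the in-house editor: a low value flags a manuscript for a closer look rather than deciding anything by itself.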

It is also possible for screening programs to extract the titles of each section, again allowing a decision to be taken on whether this is an article type the journal accepts. Finally, the quality of the language can be graded to make sure it reaches a standard where a peer reviewer can make a decision on the quality of the research.
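The section-title check can be sketched in a few lines. This is a deliberately crude version: it assumes the manuscript is plain text, treats any short line without closing punctuation as a heading, and uses a hypothetical table of required sections per article type. Real screening programs will be considerably more robust.

```python
# Hypothetical mapping of article types to the section headings each should contain.
REQUIRED_SECTIONS = {
    "research article": {"introduction", "methods", "results", "discussion"},
    "review": {"introduction", "conclusion"},
}


def extract_headings(manuscript: str) -> list[str]:
    """Collect short lines that do not end in punctuation (a crude heading heuristic)."""
    headings = []
    for line in manuscript.splitlines():
        text = line.strip()
        if text and len(text.split()) <= 5 and text[-1] not in ".?!:;,":
            headings.append(text.lower())
    return headings


def missing_sections(manuscript: str, article_type: str) -> set[str]:
    """Return the required headings for this article type that the manuscript lacks."""
    return REQUIRED_SECTIONS[article_type] - set(extract_headings(manuscript))


# Made-up draft for illustration: it has no Discussion section.
draft = (
    "Introduction\n"
    "Peer review is slow.\n"
    "Methods\n"
    "We timed 100 reviews.\n"
    "Results\n"
    "Median time fell."
)

print(missing_sections(draft, "research article"))  # prints {'discussion'}
```

An empty result means the structure matches the article type; anything else is a prompt for the editorial office to check.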

If a manuscript fails any of these tests, the editorial office staff can double check to make sure the automated assessment is correct – and if so, the manuscript may be desk-rejected. This isn’t a great end to a submission process, but at least this way it can happen in a few hours rather than a few weeks.

Finding reviewers

As explained earlier, finding reviewers is getting harder and harder each year for journals. Anything that can help in finding reviewers who agree to review for a journal is to be welcomed. Here AI helps again, by finding authors who have previously published in similar subjects and suggesting them as reviewers. Systems should also check all author names, and the names mentioned in the Acknowledgements, so as not to invite a co-author or acknowledged contributor to review.

It’s a mundane process – and one an author has no responsibility over – but it is one of the steps that takes the most time in publishing a manuscript. If AI can speed things up here, it is to be welcomed.
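To make the reviewer-suggestion step concrete, here is a minimal sketch, assuming each candidate comes with a set of topics they have published on. The `Candidate` class, the function name, and the sample names are all hypothetical. The sketch ranks candidates by topic overlap and drops anyone who appears among the manuscript's authors or in its Acknowledgements.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    name: str
    topics: set[str]  # subjects this person has previously published on


def suggest_reviewers(
    manuscript_topics: set[str],
    authors: list[str],
    acknowledged: list[str],
    candidates: list[Candidate],
    limit: int = 3,
) -> list[str]:
    """Rank candidates by shared topics, excluding authors and acknowledged names."""
    # Naive conflict check: exact, case-insensitive name match (an assumption;
    # real systems need proper author disambiguation).
    excluded = {n.casefold() for n in authors} | {n.casefold() for n in acknowledged}
    eligible = [
        c for c in candidates
        if c.name.casefold() not in excluded and c.topics & manuscript_topics
    ]
    # Most shared topics first; ties broken alphabetically for a stable order.
    eligible.sort(key=lambda c: (-len(c.topics & manuscript_topics), c.name))
    return [c.name for c in eligible[:limit]]


# Made-up data for illustration.
pool = [
    Candidate("A. Rivera", {"enzyme kinetics", "protein folding"}),
    Candidate("B. Chen", {"protein folding"}),
    Candidate("C. Okafor", {"medieval history"}),
    Candidate("D. Singh", {"enzyme kinetics", "protein folding"}),
]

print(suggest_reviewers(
    {"enzyme kinetics", "protein folding"},
    authors=["D. Singh"],
    acknowledged=["B. Chen"],
    candidates=pool,
))  # prints ['A. Rivera']
```

Matching on name strings alone will miss variants such as initials versus full names, so in practice the suggestions still need a human check before invitations go out.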

The peer review process

Peer reviewers who agree to review a manuscript are to be highly valued. What can we do to make their lives easier, the review process more enjoyable, and the time to return a review shorter? We share with them the results of the various evaluations we ran at the point of submission. This has two main benefits: it reassures them that the ‘boring’ stuff has already been assessed and judged to be OK, and it allows them to concentrate on where their expertise lies: the content of the manuscript. By letting reviewers concentrate on this, and this alone, it is to be hoped that the whole process of reviewing becomes streamlined, easier, and – for the reviewer at least – more enjoyable.

AI can be used in a number of ways, but when used appropriately and ethically, it is a great tool to aid human decision making in the editorial process. If you want to know more, CACTUS has a range of solutions to help both publishers and research authors speed up the peer review process.