What can journals do to increase researcher trust in peer review? An expert opinion

As naïve graduate students, many of us are trained to perceive the peer review process as fundamental to scientific publishing, a check on research to ensure the highest quality papers are published. However, within 10 years of peer review becoming established as the norm, criticisms of this system were already mounting. In 1845, an article described peer reviewers ‘as scheming judges quite possibly “full of envy, hatred, malice, and all uncharitableness”.’1 The fundamental problem is that peer review is like a black box, and the box is not the same across publishers, journals, editors, and reviewers.2 In more recent years, these criticisms have led to a suite of studies on the peer review process that provide clear evidence of flaws and biases across publishers, editors, and reviewers.3,4,5,6 Essentially, scientific publishing is similar to a pyramid scheme: the majority of less experienced, less established, less well-connected researchers sit at the bottom, while those at the top stay at the top. But even the top researchers, editors, and publishers are frustrated with the state of the peer review system.

The issues with the peer review process are exacerbated by the size of the global scientific community and by the internet, which allows for fast communication and distribution of new research and ideas. Many journals and editors receive far more submissions than can be published, and they often struggle to find enough peer reviewers. Peer reviewers themselves generally have their own time pressures that likely impact their ability to adequately review manuscripts in a timely manner. For example, many journals ask for a 10-day turnaround on reviews. Under these time pressures, reviews are often rushed and lacking in rigor, or are passed down to inexperienced students. So, the volume of manuscripts being handled and the associated time pressures introduce the biases inherent in quick assessments. It is no wonder that there is a major trust deficit in peer review: a lack of transparency, slow speed, and bias, with no evidence that publication quality is improved by the process.7 Yet for researchers, a strong publication record is fundamental to career success – to secure grant funding, tenure, and so on. In countries like the UK, this pressure to publish is intensified by the Research Excellence Framework system and associated financial incentives.

So, what can or should be done to improve trust in the peer review system? What can journals and publishers do to build up trust?

From my perspective, the peer review system needs a major overhaul to become fairer and more efficient, while also continuing to evaluate the integrity and importance of each manuscript. To achieve this, peer reviewing may need to be standardized across journals and publishers, but it is difficult to say how best to achieve this overhaul given the broad differences in approaches across publishers and journals. Journals do seem to be actively testing potential solutions, but so far all have flaws. For example, double-blind peer review can be effective in reducing bias, but only if the reviewers and authors are not able to identify each other from their research. Other journals now ask reviewers to sign their reviews to increase transparency, but this risks influencing the review process. Open and interactive reviews should reduce bias and improve transparency in the process, but they are time consuming, and it can be hard to find willing reviewers who can commit to the full process. Preprint servers can be useful in getting feedback to improve a manuscript ahead of submission, but again they are slow and they do not guarantee publication. One publisher is trialing a closed review system where a paper is posted on a review board with a pool of 80+ researchers invited to comment on the manuscript, which allows for pre-publication review before it is available to the wider internet. More recently, prospective peer review in the form of registered reports8 has been proposed to help evaluate the quality of the question and research design rather than the outcome.

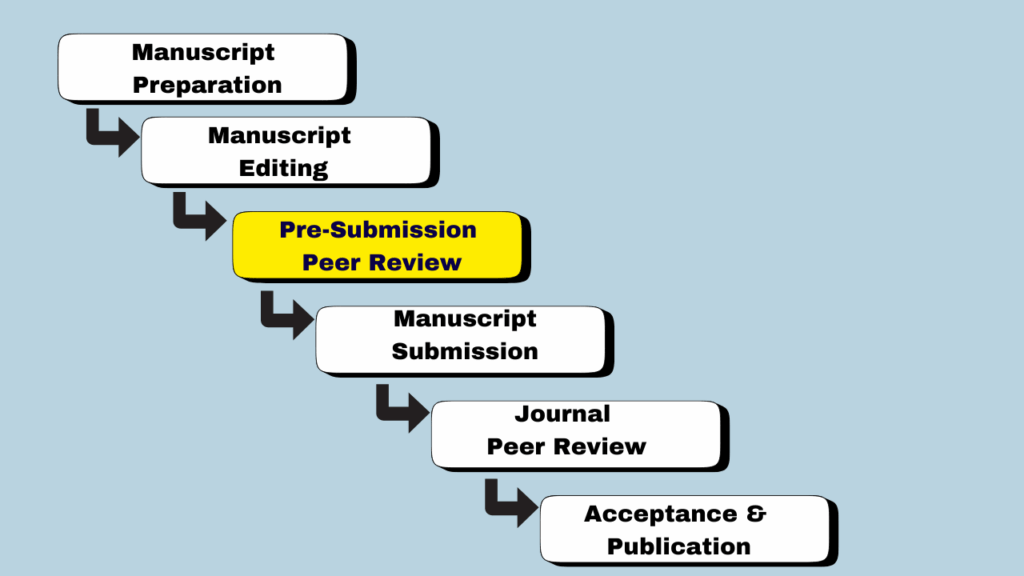

These options generally target reviewer bias. However, many authors struggle to get their manuscripts past the editor’s desk, and are often rejected with short letters that do not clearly justify the rejection. Thus, it would be useful if editors were able to properly justify desk rejections; even a quick tick box of the criteria the manuscript failed to meet may help authors publish their work elsewhere. Scientific Editing services at companies like Cactus Communications are useful for resolving language- and content-related issues before submission to improve the chances of a manuscript going out for peer review, but they cost money, which is likely limiting for some authors. Perhaps it would be useful for publishers to establish a pre-submission ‘volunteer system’ where authors could send their manuscripts to another researcher (in a slightly different field to avoid conflicts of interest) for feedback ahead of submission, including help with language and content. This would serve a dual purpose of aiding authors in need of assistance and increasing the pool of experienced peer reviewers available. Reviewers would be provided with standardized training, perhaps as part of graduate school, and would receive some compensation to encourage participation, such as a service contribution on a C.V. (a few publishers already offer discounts on APC charges for reviewers).

Do any of these ideas (preprint servers, interactive review, editing services, volunteer manuscript assistance) necessarily build trust in the peer review system? I am not convinced. But they might level the playing field a little bit. In the meantime, I take heart that the community is working towards a solution by studying the peer review process itself.7 By continuing to improve our understanding of peer review, we should be able to use this knowledge to develop an evidence-based peer review system that works.

This article is part of the CACTUS Peer Review Week 2020, which brings together curated articles, expert-led webinars, and insights from top peer reviewers across the world.

References

1. Wade’s London Rev. 1, 1845, cited in Peer review troubled from the start. 2016. https://www.nature.com/news/peer-review-troubled-from-the-start-1.19763

2. Peer review: reform or revolution? Time to open up the black box of peer review. 1997. https://doi.org/10.1136/bmj.315.7111.759

3. Peer review: a flawed process at the heart of science and journals. 2006. https://dx.doi.org/10.1258%2Fjrsm.99.4.178

4. Sexism and nepotism in peer-review. 1997. https://doi.org/10.1038/387341a0

5. Peer-review practices of psychological journals: the fate of submitted articles, submitted again. 1982. https://psycnet.apa.org/doi/10.1017/S0140525X00011183

6. Deceit and fraud in medical research. 2006. https://www.sciencedirect.com/science/article/pii/S1743919106000471

7. The limitations to our understanding of peer review. 2020. https://doi.org/10.1186/s41073-020-00092-1

8. Registered reports: prospective peer review emphasizes science over spin. 2019. https://doi.org/10.1016/j.fertnstert.2019.03.010
