Biases in scientific publication: A report from the 2017 Peer Review Congress

Because the International Congress on Peer Review and Scientific Publication is held only once every four years, there is no time to waste! Day 1 began with breakfast at 7 AM, and at 8 AM about 500 members of the scholarly community were seated, alert in anticipation of the talks to follow. Lisa A. Bero set the tone for the first series of sessions with compelling solutions to re-establish trust in peer review, pointing out in particular that while we believe in peer review, we may not have understood its true nature and purpose.

She also introduced some of the concepts that the upcoming presenters planned to discuss. The sessions that followed focused on peer review biases related to conflict of interest as well as the reporting and publication of research.

Quinn Grundy (Charles Perkins Centre, Faculty of Pharmacy, the University of Sydney, Australia) showed results from her group’s study: while conflict of interest disclosures are a common requirement in biomedical research, they typically provide either too much or too little information, making it difficult for reviewers to assess the conflicts being disclosed.

Next, Camilla Hansen (Center for Evidence-Based Medicine, Odense University Hospital and University of Southern Denmark) discussed her group’s study on how industry funding and financial conflicts among authors could influence the outcomes and quality of systematic reviews. This study found that systematic reviews with financial conflicts of interest related to industry sponsors more often have favorable conclusions than reviews without financial conflicts of interest.

Sharing results from an analysis of authors’ preference for single- or double-blind peer review, Elisa De Ranieri (Springer Nature) talked about how she and her co-authors set out to explore whether there was a bias towards a particular form of peer review at Nature journals. The findings of this study were interesting as they indicated how a simple audit could help a journal identify major trends or issues in its workflow.

While talking about gender- and age-related biases in peer review among earth and space science journals, Jory Lerback (Department of Geology and Geophysics, University of Utah) shared some hard facts about the under-representation of women in publications for these fields. She also shared details of an American Geophysical Union (AGU) experiment that reminded authors to help improve diversity in peer review.

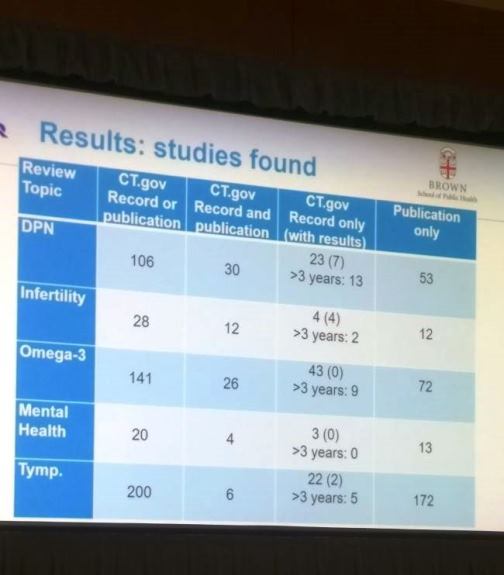

The next set of presentations showcased results of studies that analyzed biases in the reporting and publication of research. Stacey Springs (Brown University Evidence Based Practice Center, Providence, USA) pointed out problems with the registration of clinical trials and the availability of the registered data. Only 24% of the studies in this research were registered in ClinicalTrials.gov, and 38% (95 of 251) did not have results published in peer-reviewed literature. This seemed to indicate that not all registered trials were published as full studies, leaving a gap in the conclusions of the trial. An interesting audience question here was whether we should encourage authors from outside the US to register their trials in ClinicalTrials.gov, which is a US-specific database.

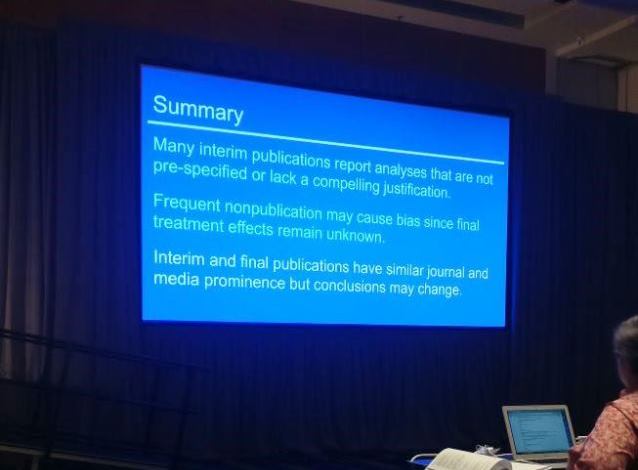

It is not uncommon for authors to consider publishing interim results from a study before completing their full research. But do the final results receive as much attention as the interim findings? Steven Woloshin (The Center for Medicine in the Media, Dartmouth Institute for Health Policy and Clinical Practice, Lebanon, USA) and colleagues found that the prevalence of interim result publications may have been underestimated thus far.

In the pre-lunch breakout session, Mona Ghannad (Academic Medical Center Amsterdam, Department of Clinical Epidemiology, Biostatistics and Bioinformatics, Amsterdam Public Health Research Institute, the Netherlands) brought up the highly interesting concept of “spin” in published biomedical research. Spin refers to the over-interpretation of results and should not be confused with data falsification or fabrication.

All abstracts of Day 1 of the Congress are available here.

During the breaks, the Editage booth was a busy place, with attendees walking in to check out the giveaways and handouts at the booth.

Related reading:

- Research integrity and misconduct in science: A report from the 2017 Peer Review Congress

- Quality of reporting in scientific publications: A report from the 2017 Peer Review Congress

- Exploring funding and grant reviews: A report from the 2017 Peer Review Congress

- Quality of scientific literature: A report from the 2017 Peer Review Congress

- Editorial and peer-review process innovations: A report from the 2017 Peer Review Congress

- Post-publication issues and peer review: A report from the 2017 Peer Review Congress

- Pre-publication and post-publication issues: A report from the 2017 Peer Review Congress

- A report of posters exhibited at the 2017 Peer Review Congress

- 13 Takeaways from the 2017 Peer Review Congress in Chicago

Download the full report of the Peer Review Congress

This post summarizes some of the sessions presented at the Peer Review Congress. For a comprehensive overview, download the full report of the event below.

An overview of the Eigth International Congress on Peer Review and Scientific Publication and International Peer Review Week 2017_0_0.pdf