Research integrity and misconduct in science: A report from the 2017 Peer Review Congress

2017 Peer Review Congress

The Editage team was one of the exhibitors at the Eighth International Congress on Peer Review and Scientific Publication, held in Chicago from September 9 to September 12, 2017. For those who could not attend, our team is presenting timely reports from the conference sessions to help you stay on top of the latest discussions on peer review among leading academics.

The first day of the Eighth International Congress on Peer Review and Scientific Publication was eventful. In the first half of the day, various groups presented studies on biases associated with conflicts of interest, peer review, reporting, and publication of research. After lunch, the focus shifted to integrity, misconduct, and data sharing.

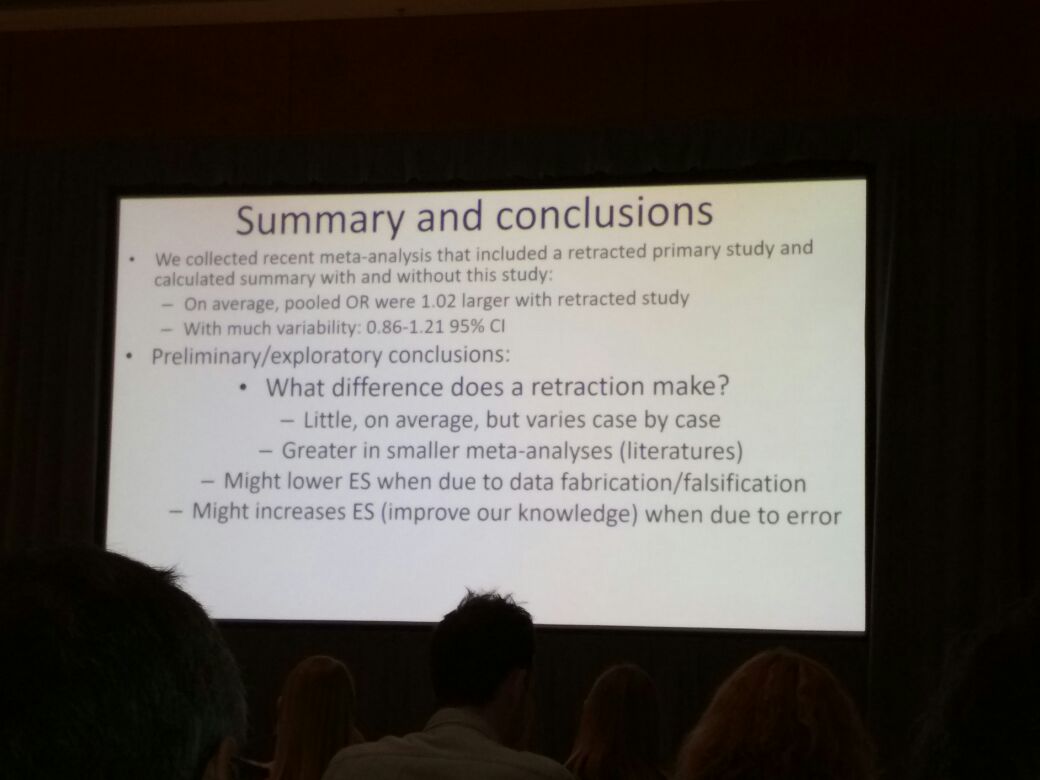

Daniele Fanelli from the Meta-Research Innovation Center at Stanford (METRICS) got the ball rolling with some striking numbers from previous studies, which had estimated the cost of a retracted article at close to USD 400,000. Between 1992 and 2012, USD 58 million (less than 1% of the NIH budget) had funded research that was later retracted. His group's study identified 3,384 potentially retracted articles in the Web of Science (WoS) that had been cited 83,946 times; of these citing records, 1,433 were identified as meta-analyses (MAs) or systematic reviews.

Of the 109 potentially relevant MAs published in 2016, the group analyzed 17 spanning multiple biomedical research areas. For each MA, the summary effect size was recalculated after the retracted study had been removed from the pool of primary studies, and the change was expressed as a ratio of odds ratios before and after retraction. This showed how much a single retraction could shift the evidence on a particular research topic. The session led to a lively discussion about the need to carry out such studies regularly and to work on reducing the costs of retractions.
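To make the ratio-of-odds-ratios idea concrete, the sketch below pools hypothetical 2x2 tables with a fixed-effect, inverse-variance model and compares the pooled odds ratio with and without one study. The pooling model, the direction of the ratio (pooled OR including the retracted study divided by pooled OR excluding it), the function names, and all data are assumptions for illustration; Fanelli's group may well have used a different model, such as random effects.

```python
import math

# Each study is a hypothetical 2x2 table with no zero cells:
# (events_treated, non_events_treated, events_control, non_events_control)


def log_odds_ratio(a, b, c, d):
    """Return the log odds ratio and its variance (Woolf's method)."""
    log_or = math.log((a * d) / (b * c))
    variance = 1 / a + 1 / b + 1 / c + 1 / d
    return log_or, variance


def pooled_odds_ratio(tables):
    """Fixed-effect, inverse-variance pooled odds ratio."""
    weighted_sum = 0.0
    total_weight = 0.0
    for table in tables:
        log_or, variance = log_odds_ratio(*table)
        weight = 1 / variance
        weighted_sum += weight * log_or
        total_weight += weight
    return math.exp(weighted_sum / total_weight)


def ratio_of_odds_ratios(tables, retracted_index):
    """Pooled OR with the retracted study divided by pooled OR without it.

    A value above 1 means the retracted study pushed the pooled OR upward.
    """
    before = pooled_odds_ratio(tables)
    after = pooled_odds_ratio(
        [t for i, t in enumerate(tables) if i != retracted_index]
    )
    return before / after


# Hypothetical meta-analysis of three studies; study 2 is later retracted.
studies = [(12, 88, 10, 90), (15, 85, 12, 88), (30, 70, 10, 90)]
print(round(ratio_of_odds_ratios(studies, retracted_index=2), 2))
```

Working on the log scale keeps the weighting symmetric for effects above and below an odds ratio of 1, which is why the pooled value is exponentiated only at the end.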

Results of Daniele Fanelli’s study on the effect of retractions on meta-analyses

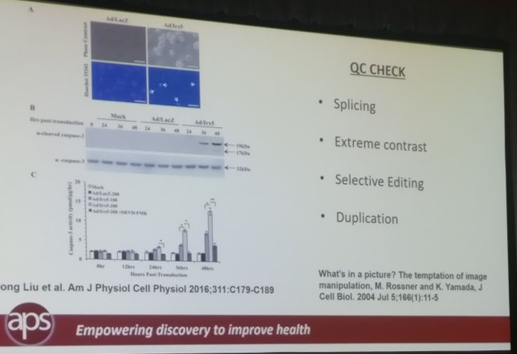

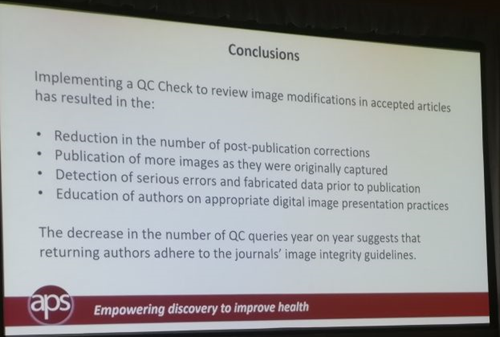

Christina Bennett, Publication Ethics Manager at the American Physiological Society (APS), discussed how APS was conducting quality control (QC) on images for 7 of its 15 journals, which publish 1,500 to 2,000 articles each year. Biology research papers commonly include images of gels, blots, cells, and so on. A QC process is necessary to ensure that these images have not been doctored, and APS had established a smooth workflow for these 7 journals. Its QC checked images for splicing, extensive contrast adjustment, selective editing, and duplication.

To keep the workflow smooth, APS ran QC on images after a paper had been accepted but before it was published. After establishing its in-house artwork assessment department in 2009, APS implemented this manual workflow for all 7 journals. APS issued ethical rejections for tentatively accepted papers whose authors could not provide the original image captures. In addition to the rejection, repeat offenders were barred from submitting articles to APS journals, and their institutions were informed of their conduct.

APS QC check for images in biomedical studies

Christina’s talk led to a spirited discussion about combining forensic tools with manual review by image analysis experts to fast-track such checks. Because smaller journals cannot afford artwork assessment departments, the option of asking peer reviewers to analyze these images was also discussed. In response to a question from an experienced medical writer in the audience, Christina said that APS encourages authors and medical writers to inform the journal when an image has been modified, explaining how and why it was modified (for example, the contrast was adjusted to highlight fluorescence in some cells).

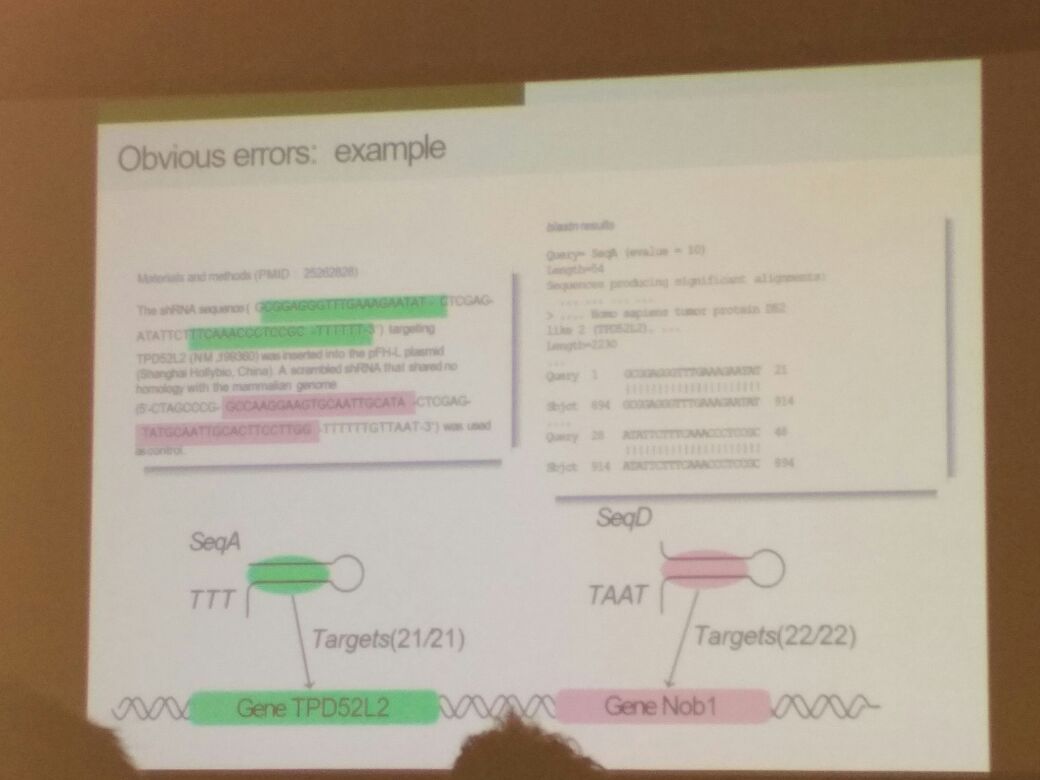

Cyril Labbé from the University of Grenoble Alpes in France took the podium next. He presented work done in collaboration with Jennifer Byrne from the Children’s Hospital at Westmead in Australia on Seek & Blastn, a semi-automated tool that uses NCBI’s BLASTN to verify nucleotide sequences reported in molecular biology studies. Gene knockdown studies and studies involving cancer cell lines often require synthesized nucleotide sequences, and the tool is designed to flag incorrectly reported sequences in the published literature, helping to avoid reproducibility problems in molecular biology. Their results showed that the tool is far from perfect or fully automated; however, it provided significant support and reduced the manual analysis required from experts. Text-mining and analysis tools had difficulty extracting nucleotide sequences from PDFs, an increasingly common format, and methods and materials reported as supplementary data are often provided as PDFs.
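The first step of such a pipeline, pulling reported sequences out of article text, can be sketched with a regular expression. This is not the Seek & Blastn implementation: the 18-base minimum, the uppercase-only rule, the U-to-T conversion, and the function name `extract_candidate_sequences` are hypothetical choices for illustration, and real articles (especially PDFs) would need far more robust parsing.

```python
import re

# Hypothetical heuristic: a candidate sequence is a run of at least 18
# uppercase bases (A, C, G, T or U), optionally separated by single spaces
# or hyphens, that is not embedded in an ordinary word.
CANDIDATE_SEQUENCE = re.compile(
    r"(?<![A-Za-z])[ACGTU](?:[ -]?[ACGTU]){17,}(?![A-Za-z])"
)


def extract_candidate_sequences(text):
    """Return candidate nucleotide sequences found in plain text.

    Separators are removed and U is converted to T so that RNA
    sequences (e.g. siRNAs) can be compared against DNA databases.
    """
    candidates = []
    for match in CANDIDATE_SEQUENCE.finditer(text):
        sequence = re.sub(r"[ -]", "", match.group())
        candidates.append(sequence.replace("U", "T"))
    return candidates


methods = "Primer F: 5'-GACTTCAGGTCAGCTTAGCA-3' was used for qPCR."
for seq in extract_candidate_sequences(methods):
    print(len(seq), seq)
```

In a full pipeline, each candidate would then be checked with BLASTN (for example, the `blastn` program in NCBI BLAST+) to confirm that it matches the gene or transcript the authors say it targets.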

How the Seek & Blastn tool identifies obvious errors: Cyril Labbé

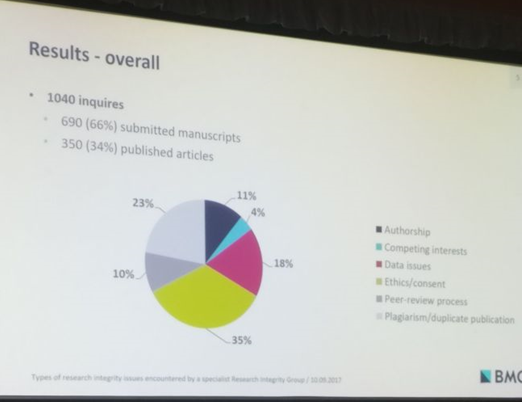

Stephanie Boughton from BioMed Central’s specialized Research Integrity Group (RIG) delivered the final presentation on integrity and misconduct. Her study categorized the research integrity issues encountered by BMC’s RIG, which covers 300 biological and medical journals, and determined how often each type occurred. Queries about ‘ethics and consent’ were the most frequent before publication, whereas issues with data integrity were more common for published articles.

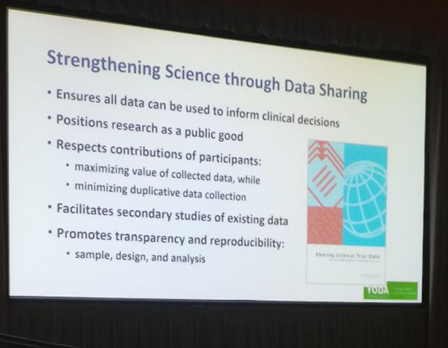

After a coffee break, sessions on data sharing took over the early part of Sunday evening. Sarah Tannenbaum, a third-year medical student at Yale University, presented early findings from a survey of clinical trial investigators about their experiences with relatively new data sharing policies. Although investigators were sharing data in line with journal data sharing requirements, their willingness to share depended on the type of request and on the intent to publish similar analyses. Joseph Ross from Yale University shared early experiences from the Yale University Open Data Access (YODA) Project, which demonstrated demand for shared clinical research data as a resource for investigators. His presentation also highlighted the importance of understanding best practices and incentives for researchers and funders if data sharing is to succeed in the future.

What YODA does: Joseph Ross

The final data sharing session was presented by Deborah Zarin from ClinicalTrials.gov, who shared data on researchers’ intent to share individual participant data (IPD). Her survey found that only 13.3% of respondents responded positively to sharing IPD. In-depth follow-up with these respondents showed that most of them did not understand what they were saying yes to. She concluded that considerable cultural and scientific changes would be needed for IPD sharing to become common practice.

The last session of Day 1 was presented by Aileen Fyfe, Professor of Modern History at the University of St. Andrews. In a very engaging talk, she traced the history of peer review between 1865 and 1965, a period when the term ‘peer review’ had not yet been coined and the practice was simply called refereeing. Some of the findings she shared were fascinating, making it perhaps the most engaging history lecture of all time. The day concluded with an opening reception, where participants unwound and networked over wine, beer, and nibbles.

Tomorrow promises to be an exciting day, and we will be covering all the sessions live. Look for our daily update tomorrow to learn what the experts are saying about quality of reporting, scientific literature, trial registration, and funding/grant review.

Related reading:

- Biases in scientific publication: A report from the 2017 Peer Review Congress

- Quality of reporting in scientific publications: A report from the 2017 Peer Review Congress

- Exploring funding and grant reviews: A report from the 2017 Peer Review Congress

- Quality of scientific literature: A report from the 2017 Peer Review Congress

- Editorial and peer-review process innovations: A report from the 2017 Peer Review Congress

- Post-publication issues and peer review: A report from the 2017 Peer Review Congress

- Pre-publication and post-publication issues: A report from the 2017 Peer Review Congress

- A report of posters exhibited at the 2017 Peer Review Congress

- 13 Takeaways from the 2017 Peer Review Congress in Chicago

Download the full report of the Peer Review Congress

This post summarizes some of the sessions presented at the Peer Review Congress. For a comprehensive overview, download the full report of the event below.

An overview of the Eighth International Congress on Peer Review and Scientific Publication and International Peer Review Week 2017 (PDF)

Published on: Sep 12, 2017
